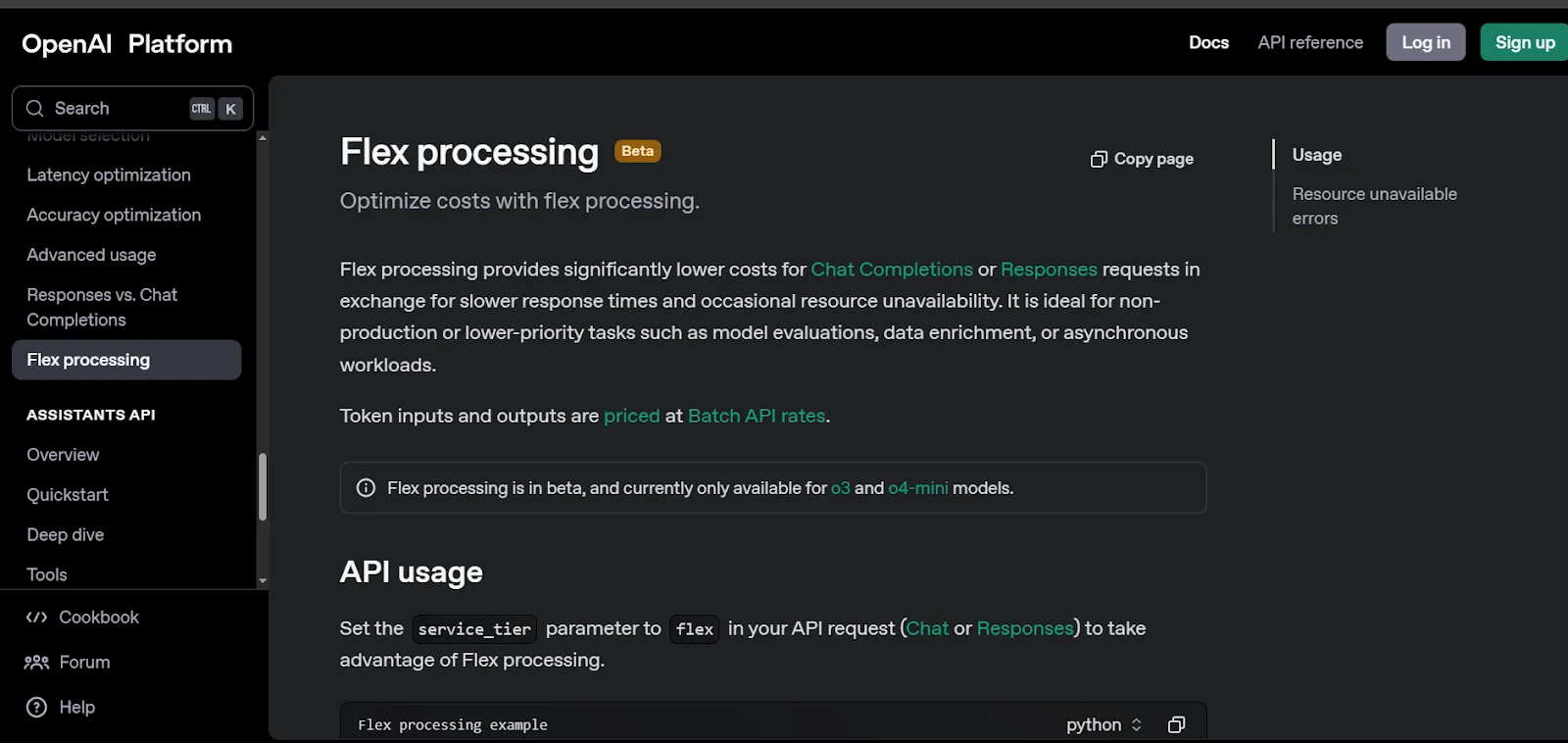

OpenAI is stepping up its game in the AI space with the launch of its new Flex processing option. This API update, aimed at giving developers a more affordable way to access OpenAI’s powerful models, is designed to provide cheaper processing for less urgent tasks. But there’s a catch — it comes with slower response times and occasional resource unavailability.

As OpenAI faces stiff competition from rivals like Google, the move to offer Flex processing is part of a broader strategy to make its tools more accessible to developers working on non-production tasks, data enrichment, model evaluations, and other asynchronous workloads.

2. What Is Flex Processing and How Does It Work?

Flex processing, currently in beta, is available for OpenAI’s latest reasoning models, o3 and o4-mini. These models are often used for tasks like evaluations, data analysis, and workload management that don’t require immediate results. The standout feature of Flex processing is its cost reduction. OpenAI is offering a significant price drop for tasks that don’t demand real-time processing:

- For o3, the price drops to $5 per million input tokens and $20 per million output tokens, down from the regular $10 per million input tokens and $40 per million output tokens.

- For the o4-mini, Flex processing cuts costs to just $0.55 per million input tokens and $2.20 per million output tokens, a sharp contrast to the regular rates of $1.10 per million input tokens and $4.40 per million output tokens.

These price reductions make it more affordable for smaller companies or individual developers to use OpenAI’s models without breaking the bank. However, the reduced price comes with the trade-off of slower processing speeds, which is something users will have to factor into their workflows.

3. A Competitive Move in the Rising AI Race

Flex processing arrives at a time when the cost of cutting-edge AI technology is climbing rapidly. OpenAI is facing increasing competition from Google, which recently launched Gemini 2.5 Flash. Google’s new model is designed to rival DeepSeek’s R1, offering similar performance at a much lower cost for input tokens.

Flex processing is OpenAI’s response to this growing trend of affordable, budget-friendly models. With the rise of cheaper alternatives, it’s clear that cost efficiency is becoming a key battleground for AI providers. By introducing Flex, OpenAI hopes to capture the attention of developers who are looking for cost-effective solutions without the need for high-end performance.

4. Who Can Access Flex Processing?

While the pricing change is a major draw, OpenAI has also introduced a new ID verification requirement for developers in tiers 1-3 of its usage hierarchy. Tiers are determined by how much money developers spend on OpenAI’s services. This additional step is aimed at ensuring security and preventing misuse of the models.

According to OpenAI, this ID verification process will help curb any potential issues with bad actors who could exploit the system. Developers who fall into these tiers will need to complete the verification process in order to access the o3 model and other models that require this extra layer of security.

5. Is Flex Processing Right for You?

For developers looking for lower-cost options for non-production tasks and asynchronous projects, Flex processing presents an excellent opportunity. It is not intended for high-priority, real-time work, but for those who can afford slower response times, it offers a great way to cut down on costs.

As OpenAI continues to innovate and expand its offerings, Flex processing is likely to evolve. It represents just one part of a broader trend toward more affordable AI solutions, where the balance between cost and performance will continue to shift.

With Flex processing, OpenAI is making it clear that they are committed to offering developers flexible, affordable options while also keeping pace with the competition. Whether or not it’s the right choice depends on the specific needs of the developers using it, but for budget-conscious teams, this could be a game-changer.